At the end of January, RE-WORK organized a Virtual Assistant Summit, which took place in San Francisco at the same time as the RE-WORK Deep Learning Summit.

Craig Villamor wrote a nice overview of the key topics discussed at the summit.

I didn’t attend these conferences, but I watched a few of the presentations that RE-WORK kindly uploaded to YouTube, and I would like to share the notes I took while watching them. I may have misinterpreted something, so please keep that in mind and watch the original videos for more details.

Dennis Mortensen, CEO & Founder, x.ai

Two decades ago, if you wanted to use software, you had to install it on your desktop and could use it only from that desktop. Then Salesforce came along and told us that this wasn’t necessary: you could use software from anywhere. Then Apple came along and told us that there is an app for that, and applications became mobile. The next paradigm is the one in which intelligent agents arrive.

In the old paradigm, the user had to understand the objective, learn the interface, and then work towards the objective. In the new paradigm, you just tell the agent what you want done, and the agent works on it. There is no interface for the user to touch. We need a new setting in which the syntax disappears and people move from small tasks to jobs.

There are two choices. Do you build it as a traditional piece of software where the user describes the objective, or do you humanize it? Humanizing the agent democratizes the software. This is a black-and-white choice with nothing in between: either you humanize it or you don’t.

Who do you hire to humanize your agents, and who will design that persona? Engineering bot rules is not humanizing; it’s just moving from pixels to text.

x.ai doesn’t try to fool people into thinking that its bot, Amy, is human. The company says up front that Amy is a machine, and then sees whether it can create a relationship.

Many users treat the bot like a human. They thank Amy and ask whether she will be joining the meeting. Users even send flowers and whiskey to Amy, even though they were told up front that it is a machine. When they want to reschedule a meeting, instead of just writing “cancel the meeting”, they apologize and explain why they can’t make it. In 11% of the meetings x.ai schedules, at least one email has a single intent: to thank Amy.

We will degrade as humans if we use systems where there is no penalty for being an asshole.

We can extract a much richer dataset if we humanize the agent. We can tease more details out of the user.

Rob’s presentation was about strategic questions in the virtual assistant space.

Why does market structure matter? Because market structure ultimately determines your profits. There are three types of markets: existing markets, resegmented markets, and new markets. Virtual assistants are a new market.

3 key issues in Intelligent Virtual Assistant strategy:

One bot vs many bots

One bot is hard to build, but in most cases one bot is better than many bots.

At Talla, they are going to build multiple bots (one marketing bot, one sales bot, one recruiting bot, etc.), and over time they expect to merge them into one bot.

Bot tech stack

- Communication. A bot can communicate via one or more channels with humans and, eventually, with other bots.

- Skills. A bot has skills that it performs only for some humans and not for others.

- Memory. A bot can access its own data store or other data stores as needed in order to collaborate.

- Privacy. A bot respects the privacy of information and communicates appropriately.

- Behavior. A bot respects the authority of different humans in order to perform appropriate tasks.

- Advanced contextual awareness. A bot is aware of time, space, people, and other contexts. It is able to act on them independent of human interaction.
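As a rough illustration of how these layers might fit together in code, here is a minimal Python sketch that maps each layer onto one small class. It is an assumption-laden toy, not Talla’s design: the `Bot` class, the role, channel, and skill names are all hypothetical, memory is a plain in-process dictionary (no access to other data stores), and contextual awareness is reduced to checking the time of day.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Bot:
    # Communication: the channels this bot can talk on (hypothetical names).
    channels: set
    # Skills + Behavior: skill name -> set of roles allowed to invoke it.
    skills: dict
    # Memory: the bot's own key-value store, key -> (owner, value, private).
    memory: dict = field(default_factory=dict)

    def remember(self, owner: str, key: str, value, private: bool = False) -> None:
        self.memory[key] = (owner, value, private)

    def recall(self, requester: str, key: str):
        # Privacy: a private entry is returned only to the human who owns it.
        owner, value, private = self.memory.get(key, (None, None, False))
        if private and requester != owner:
            return None
        return value

    def handle(self, channel: str, user: str, role: str, skill: str, now: datetime) -> str:
        # Communication: refuse channels the bot does not support.
        if channel not in self.channels:
            return "unsupported channel"
        # Behavior: only roles with authority for this skill may trigger it.
        if role not in self.skills.get(skill, set()):
            return f"{user} is not allowed to use {skill}"
        # Contextual awareness (time only, in this toy): tailor the reply to the hour.
        greeting = "Good morning" if now.hour < 12 else "Good afternoon"
        return f"{greeting}, {user}: running {skill}"


if __name__ == "__main__":
    bot = Bot(
        channels={"slack", "email"},
        skills={"approve_expense": {"manager"}, "book_room": {"manager", "employee"}},
    )
    print(bot.handle("slack", "Ann", "employee", "approve_expense", datetime(2016, 1, 28, 9)))
    # Ann is not allowed to use approve_expense
    bot.remember("Ann", "salary", 100, private=True)
    print(bot.recall("Bob", "salary"))  # None
```

In this sketch each layer is a separate check inside one object, which loosely mirrors the integrated approach; a company betting on a single layer would instead implement only one of these pieces and rely on others for the rest.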

An integrated approach, where one company builds all layers of the stack, is better but more expensive. Companies need to bet on which layer is most important. The ecosystem can change over time, and that will affect decisions about which layers to work on.

Are there network effects to knowledge?

How does your assistant learn? What types of data does your assistant get access to? Are there ways to combine this data to make it more valuable?

Tim Tuttle, CEO & Founder, MindMeld

Tim was talking about voice applications.

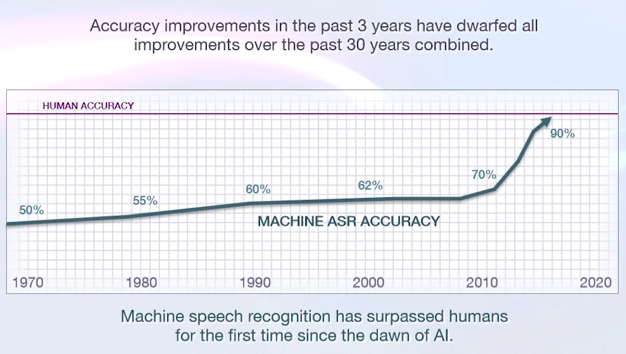

Machine speech recognition has surpassed humans for the first time since the dawn of AI. Accuracy improvements over the past 3 years have dwarfed all improvements over the previous 30 years combined.

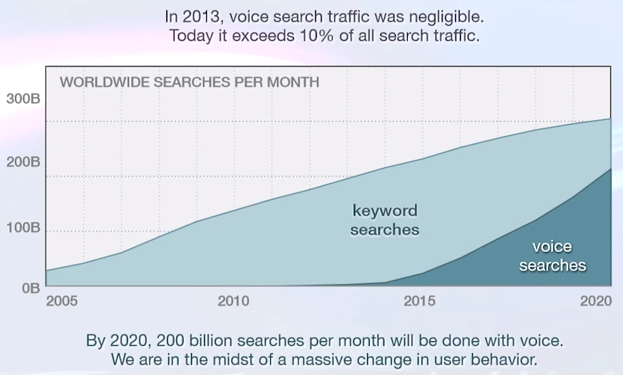

Voice search usage has started to explode. In 2013, voice search traffic was negligible. Today it exceeds 10% of all search traffic.

Developers should consider voice input alongside touch and text input.

As consumers start using voice interfaces in apps like Siri and Cortana, they will expect voice interfaces in other applications.

Siri won’t be good in many domains, because applications are built on data and Apple doesn’t have the data for many applications. Yelp has a dataset for restaurants; Netflix has data about movies. In the future, the companies that own this data will learn how to use it to create this new voice experience.

Speech recognition is just one step in the pipeline:

Spoken input -> Speech recognizer -> Intent classifier -> Semantic parser -> Dialog manager -> Question answerer
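To make the stages concrete, here is a toy, text-only Python sketch of that pipeline. It is not MindMeld’s implementation: every stage is a hypothetical stand-in (keyword rules instead of a trained intent classifier, a regex slot filler instead of a semantic parser, a two-entry dictionary instead of a real restaurant dataset), and the speech recognizer is stubbed out by treating the input string as an already-transcribed utterance. The names `Frame`, `RESTAURANTS`, and the stage functions are invented for illustration.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Frame:
    """State passed from stage to stage (hypothetical structure)."""
    text: str = ""
    intent: str = "unknown"
    slots: dict = field(default_factory=dict)
    reply: str = ""


# Hypothetical toy dataset standing in for a domain owner's data.
RESTAURANTS = {"sushi": "Sushi Ran", "pizza": "Tony's Pizza"}


def recognize_speech(audio: str) -> Frame:
    # Stub: pretend the "audio" is already a transcript and just normalize it.
    return Frame(text=audio.strip().lower())


def classify_intent(frame: Frame) -> Frame:
    # Keyword rules in place of a trained intent classifier.
    if re.search(r"\b(find|recommend)\b", frame.text):
        frame.intent = "find_restaurant"
    return frame


def parse_semantics(frame: Frame) -> Frame:
    # Fill the "cuisine" slot if a known cuisine word appears in the text.
    for cuisine in RESTAURANTS:
        if re.search(rf"\b{cuisine}\b", frame.text):
            frame.slots["cuisine"] = cuisine
    return frame


def manage_dialog(frame: Frame) -> Frame:
    # Ask a follow-up question when a required slot is missing.
    if frame.intent == "find_restaurant" and "cuisine" not in frame.slots:
        frame.reply = "What kind of food would you like?"
    elif frame.intent == "unknown":
        frame.reply = "Sorry, I didn't understand that."
    return frame


def answer_question(frame: Frame) -> Frame:
    # Answer only if the dialog manager has not already produced a reply.
    if not frame.reply:
        frame.reply = f"Try {RESTAURANTS[frame.slots['cuisine']]}."
    return frame


PIPELINE = [classify_intent, parse_semantics, manage_dialog, answer_question]


def run(audio: str) -> str:
    frame = recognize_speech(audio)
    for stage in PIPELINE:
        frame = stage(frame)
    return frame.reply


if __name__ == "__main__":
    print(run("Find me a sushi place"))   # Try Sushi Ran.
    print(run("Recommend a restaurant"))  # What kind of food would you like?
    print(run("What's the weather?"))     # Sorry, I didn't understand that.
```

Passing a single `Frame` through a list of stage functions keeps each stage replaceable in this sketch, so a real recognizer or classifier could be swapped in without touching the other stages.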